
Review the common steps to make sure your development environment is set up correctly. To port your existing Unity content, follow these steps:

1. Upgrade to the latest public build of Unity with Windows MR support

- Save a copy of your project before you get started.

- Download the latest recommended public build of Unity with Windows Mixed Reality support.

- If your project was built on an older version of Unity, review the Unity Upgrade Guides.

- Follow the instructions for using Unity’s automatic API updater.

- See if you need to make any other changes to get your project running, and work through any errors and warnings.

2. Upgrade your middleware to the latest versions

With any Unity update, you might need to update one or more middleware packages that your game or application depends on. Updating to the latest middleware increases the likelihood of success throughout the rest of the porting process.

3. Target your application to run on Win32

From inside your Unity application:

- Navigate to File > Build Settings.

- Select PC, Mac, Linux Standalone.

- Set target platform to Windows.

- Set architecture to x86.

- Select Switch Platform.

[!NOTE] If your application has any dependencies on device-specific services, such as matchmaking from Steam, disable them now. You can hook up the Windows equivalent services later.
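The platform switch described in the steps above can also be scripted from the editor. The following is a minimal sketch, assuming the script is placed in an `Editor` folder of the project. The class name and menu path are hypothetical and not part of the original guidance. `BuildTarget.StandaloneWindows` is Unity's 32-bit (x86) Windows standalone target.

```csharp
#if UNITY_EDITOR
using UnityEditor;
using UnityEngine;

// Hypothetical editor helper: adds a menu item that switches the active
// build target to Windows Standalone x86, mirroring the manual steps in
// File > Build Settings.
public static class PortingBuildTargetMenu
{
    [MenuItem("Tools/Porting/Switch to Windows x86 Standalone")]
    public static void SwitchToWindowsX86()
    {
        // StandaloneWindows is the 32-bit target; StandaloneWindows64 is x64.
        bool switched = EditorUserBuildSettings.SwitchActiveBuildTarget(
            BuildTargetGroup.Standalone,
            BuildTarget.StandaloneWindows);

        if (switched)
        {
            Debug.Log("Active build target is now Windows Standalone (x86).");
        }
        else
        {
            Debug.LogWarning("Could not switch the build target. Check that Windows build support is installed.");
        }
    }
}
#endif
```

Scripting the switch is mainly useful when several people port the same project, because it removes one manual step that is easy to get wrong.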

4. Add support for the Mixed Reality OpenXR Plugin

- Choose and install a Unity version and XR plugin. While Unity 2020.3 LTS with the Mixed Reality OpenXR plugin is best for Mixed Reality development, you can also build apps with other Unity configurations.

- Remove or conditionally compile out any library support specific to another VR SDK. Those assets might change settings and properties on your project in ways that are incompatible with Windows Mixed Reality.

For example, if your project references the SteamVR SDK, update your project to instead use Unity’s common VR APIs, which support both Windows Mixed Reality and SteamVR.

- In your Unity project, target the Windows 10 SDK.

- For each scene, set up the camera.
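The "conditionally compile out" option mentioned in this step can be sketched with a scripting define symbol. In the example below, `USE_STEAMVR_SDK` is a hypothetical symbol that you would define yourself in Player Settings for builds that still ship the other SDK. The class and method names are also illustrative assumptions.

```csharp
using UnityEngine;

// Sketch: keep SDK-specific code in the project but exclude it from the
// Windows Mixed Reality build by leaving USE_STEAMVR_SDK undefined.
public class VrSdkBootstrap : MonoBehaviour
{
    void Awake()
    {
#if USE_STEAMVR_SDK
        // Compiled only when the hypothetical USE_STEAMVR_SDK symbol is defined.
        InitializeSdkSpecificFeatures();
#else
        // Default path: rely on Unity's common XR APIs only.
        Debug.Log("SDK-specific features are compiled out; using Unity's common XR APIs.");
#endif
    }

#if USE_STEAMVR_SDK
    private void InitializeSdkSpecificFeatures()
    {
        // Placeholder for calls into the other VR SDK.
        Debug.Log("Initializing SDK-specific features.");
    }
#endif
}
```

Because the guarded code is never compiled when the symbol is undefined, the build doesn't need the other SDK's assemblies to be present.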

5. Set up your Windows Mixed Reality hardware

- Review steps in Immersive headset setup.

- Learn how to Use the Windows Mixed Reality simulator and Navigate the Windows Mixed Reality home.

6. Use the stage to place content on the floor

You can build Mixed Reality experiences across a wide range of experience scales. If you’re porting a seated-scale experience, make sure Unity is set to the Stationary tracking space type:

    XRDevice.SetTrackingSpaceType(TrackingSpaceType.Stationary);

This code sets Unity’s world coordinate system to track the stationary frame of reference. In the Stationary tracking mode, content you place in the editor just in front of the camera’s default location (in Unity, forward is +Z) appears in front of the user when the app launches. To recenter the user’s seated origin, you can call Unity’s XR.InputTracking.Recenter method.
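The seated-scale setup and recentering described above could be combined in one component, as in the following sketch. It assumes the legacy `UnityEngine.XR` API that this section uses, which may be marked obsolete in newer Unity versions. The component name and the R key binding are hypothetical choices for illustration.

```csharp
using UnityEngine;
using UnityEngine.XR;

// Sketch: request the Stationary tracking space at startup and let the
// user recenter the seated origin on demand.
public class SeatedScaleSetup : MonoBehaviour
{
    void Start()
    {
        // SetTrackingSpaceType returns false if the mode could not be set.
        if (!XRDevice.SetTrackingSpaceType(TrackingSpaceType.Stationary))
        {
            Debug.LogWarning("Could not set the Stationary tracking space type.");
        }
    }

    void Update()
    {
        // Hypothetical binding: press R to recenter the seated origin.
        if (Input.GetKeyDown(KeyCode.R))
        {
            InputTracking.Recenter();
        }
    }
}
```

In a shipping app, the recenter action would more likely be bound to a controller button or a menu option than to a keyboard key.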

If you’re porting a standing-scale experience or room-scale experience, you’re placing content relative to the floor. You reason about the user’s floor using the spatial stage, which represents the user’s defined floor-level origin. The spatial stage can include an optional room boundary you set up during the first run.

For these experiences, make sure Unity is set to the RoomScale tracking space type. RoomScale is the default, but set it explicitly and check that the call returns true. This check catches situations where the user has moved their computer away from the room they calibrated.

    if (XRDevice.SetTrackingSpaceType(TrackingSpaceType.RoomScale))
    {
        // RoomScale mode was set successfully. App can now assume that y=0 in Unity world coordinate represents the floor.
    }
    else
    {
        // RoomScale mode was not set successfully. App can't make assumptions about where the floor plane is.
    }

Once your app successfully sets the RoomScale tracking space type, content placed on the y=0 plane appears on the floor. The origin at (0, 0, 0) is the specific place on the floor where the user stood during room setup, with +Z in Unity representing the forward direction they faced during setup.

In script code, you can then call the TryGetGeometry method on the UnityEngine.Experimental.XR.Boundary type to get a boundary polygon, specifying a boundary type of TrackedArea. If the user defined a boundary, you get back a list of vertices. You can then deliver a room-scale experience to the user, where they can walk around the scene you create.

The system automatically renders the boundary when the user approaches it. Your app doesn’t need to use this polygon to render the boundary itself.
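Reading the boundary polygon might look like the following sketch. It assumes the legacy `UnityEngine.Experimental.XR.Boundary` API named above, which may not be available in newer Unity versions. The component name and log messages are illustrative.

```csharp
using System.Collections.Generic;
using UnityEngine;
using UnityEngine.Experimental.XR;
using UnityEngine.XR;

// Sketch: set the RoomScale tracking space, then query the boundary
// polygon the user defined during room setup.
public class RoomBoundaryReader : MonoBehaviour
{
    // Filled by TryGetGeometry with the polygon's vertices.
    private readonly List<Vector3> boundaryVertices = new List<Vector3>();

    void Start()
    {
        if (!XRDevice.SetTrackingSpaceType(TrackingSpaceType.RoomScale))
        {
            Debug.LogWarning("RoomScale was not set; the floor position is unknown.");
            return;
        }

        if (Boundary.TryGetGeometry(boundaryVertices, Boundary.Type.TrackedArea))
        {
            Debug.Log("Boundary polygon has " + boundaryVertices.Count + " vertices.");
        }
        else
        {
            // No boundary defined: avoid assuming a walkable area.
            Debug.Log("No boundary is available for this user.");
        }
    }
}
```

An app could use the returned vertices to keep interactive content inside the area the user can actually reach.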

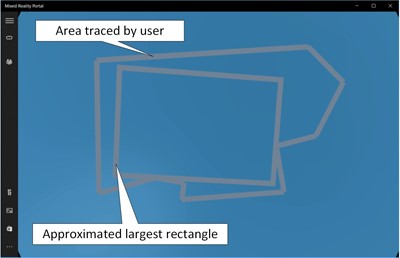

Example of results:

For more information, see Coordinate systems in Unity.

7. Work through your input model

Each game or application that targets an existing head-mounted display (HMD) has a set of inputs that it handles, types of inputs that it needs for the experience, and specific APIs that it calls to get those inputs. It’s simple and straightforward to take advantage of the inputs available in Windows Mixed Reality.

See the input porting guide for Unity for details about how Windows Mixed Reality exposes input, and how the input maps to what your application does now.

[!IMPORTANT] If you use HP Reverb G2 controllers, see HP Reverb G2 Controllers in Unity for further input mapping instructions.

8. Test and tune performance

Windows Mixed Reality is available on many devices, ranging from high-end gaming PCs to broad-market mainstream PCs. These devices have significantly different compute and graphics budgets available for your application.

If you ported your app using a premium PC with significant compute and graphics budgets, be sure to test and profile your app on hardware that represents your target market. For more information, see Windows Mixed Reality minimum PC hardware compatibility guidelines.

Both Unity and Visual Studio include performance profilers, and both Microsoft and Intel publish guidelines on performance profiling and optimization.

For an extensive discussion of performance, see Understand performance for Mixed Reality. For specific details about Unity, see Performance recommendations for Unity.
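As a lightweight complement to the profilers mentioned above, a small component can flag sustained frame-time overruns while you test on representative hardware. This is a rough sketch, not a substitute for proper profiling. The component name, the 90 frames-per-second target, and the 120-frame averaging window are assumptions to adjust for your own target devices.

```csharp
using UnityEngine;

// Sketch: average the frame time over a fixed window of frames and warn
// when the average exceeds the budget implied by the target frame rate.
public class FrameTimeMonitor : MonoBehaviour
{
    // Assumed values; tune them for the hardware you are targeting.
    [SerializeField] private float targetFramesPerSecond = 90f;
    [SerializeField] private int sampleWindow = 120;

    private float accumulatedSeconds;
    private int frameCount;

    void Update()
    {
        // Unscaled time ignores Time.timeScale, so pausing gameplay
        // doesn't distort the measurement.
        accumulatedSeconds += Time.unscaledDeltaTime;
        frameCount++;

        if (frameCount < sampleWindow)
        {
            return;
        }

        float averageMs = accumulatedSeconds / frameCount * 1000f;
        float budgetMs = 1000f / targetFramesPerSecond;

        if (averageMs > budgetMs)
        {
            Debug.LogWarning(string.Format(
                "Average frame time {0:F2} ms exceeds the {1:F2} ms budget.",
                averageMs, budgetMs));
        }

        // Start a new measurement window.
        accumulatedSeconds = 0f;
        frameCount = 0;
    }
}
```

When the warning fires, use the Unity Profiler to find which part of the frame is over budget.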

Input mapping

For input mapping information and instructions, see Input porting guide for Unity.
