
Scene understanding SDK overview

Scene Understanding transforms the unstructured environment sensor data that your Mixed Reality device captures into a powerful abstract representation. The SDK acts as the communication layer between your application and the Scene Understanding runtime. It aims to mimic existing standard constructs, such as 3D scene graphs for 3D representations, and 2D rectangles and panels for 2D applications. While the constructs Scene Understanding mimics map to concrete frameworks, Scene Understanding itself is framework agnostic, which allows interoperability between the varied frameworks that interact with it. As Scene Understanding evolves, the role of the SDK is to ensure that new representations and capabilities continue to be exposed within a unified framework. This document first introduces high-level concepts that will help you get familiar with the development environment and usage, and then provides more detailed documentation for specific classes and constructs.

Where do I get the SDK?

The SceneUnderstanding SDK is downloadable via the Mixed Reality Feature Tool. Note: the latest release depends on preview packages and you’ll need to enable pre-release packages to see it.

For version 0.5.2022-rc and later, Scene Understanding supports language projections for C# and C++, allowing developers to build applications for Win32 or UWP platforms. As of this version, Scene Understanding also works in the Unity editor, with the exception of the SceneObserver, which is used solely for communicating with HoloLens 2. Scene Understanding requires Windows SDK version 18362 or higher.

Conceptual Overview

The Scene

Your mixed reality device is constantly integrating information about what it sees in your environment. Scene Understanding funnels all of these data sources and produces a single cohesive abstraction. Scene Understanding generates Scenes, which are a composition of SceneObjects that each represent an instance of a single thing (for example, a wall, ceiling, or floor). SceneObjects are themselves a composition of SceneComponents, which represent the more granular pieces that make up the SceneObject. Examples of components are quads and meshes, but in the future they could represent bounding boxes, collision meshes, metadata, and so on.

Converting the raw sensor data into a Scene is a potentially expensive operation that can take seconds for medium spaces (~10x10m) to minutes for large spaces (~50x50m), so the device does not compute it unless an application requests it. Instead, Scene generation is triggered by your application on demand. The SceneObserver class has static methods that can Compute or Deserialize a scene, which you can then enumerate and interact with. The “Compute” action executes on the CPU, but in a separate process (the Mixed Reality Driver). Although the computation happens in another process, the resulting Scene data is stored and maintained in your application in the Scene object.

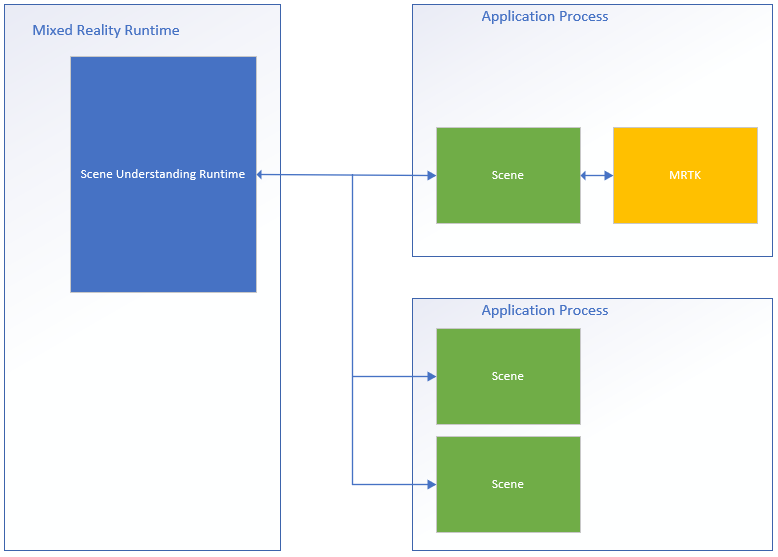

The diagram above illustrates this process flow and shows examples of two applications interfacing with the Scene Understanding runtime.

On the left-hand side is a diagram of the mixed reality runtime, which is always on and running in its own process. This runtime is responsible for performing device tracking, spatial mapping, and other operations that Scene Understanding uses to understand and reason about the world around you. On the right side of the diagram, we show two theoretical applications that make use of Scene Understanding. The first application interfaces with MRTK, which uses the Scene Understanding SDK internally; the second application computes and uses two separate scene instances. All three Scenes in this diagram are distinct instances: the driver isn’t tracking global state that is shared between applications, and Scene Objects in one scene aren’t found in another. Scene Understanding does provide a mechanism to track over time, but this is done using the SDK, so the tracking code runs in your app’s process.

Because each Scene stores its data in your application’s memory space, you can assume that all functions of the Scene object or its internal data are always executed in your application’s process.
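The ownership model described above can be sketched in a few lines of C++. This is a minimal illustration and not SDK code: `FakeDriver`, `SceneSnapshot`, `ObjectRecord`, and `compute` are hypothetical names, and the real computation happens in a separate process rather than in an in-process class. The sketch only shows that work happens on request, that each request yields an independent scene owned by the caller, and that objects in one scene are not found in another.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical stand-ins for illustration; these are not SDK types.
struct ObjectRecord {
    std::uint64_t id;
    std::string label;
};

// A scene as the application holds it: plain data in the caller's memory.
struct SceneSnapshot {
    std::vector<ObjectRecord> objects;

    // True if an object with this id belongs to this particular scene.
    bool contains(std::uint64_t id) const {
        for (const ObjectRecord& o : objects) {
            if (o.id == id) return true;
        }
        return false;
    }
};

// Stands in for the driver. It does work only when asked and keeps no
// scene state; the counter exists solely to hand out fresh ids.
class FakeDriver {
public:
    SceneSnapshot compute(float radius_m) {
        assert(radius_m > 0.0f);
        SceneSnapshot scene;
        for (const char* label : {"Wall", "Floor", "Ceiling"}) {
            scene.objects.push_back({next_id_++, label});
        }
        return scene;  // returned by value: the caller owns the result
    }

private:
    std::uint64_t next_id_ = 1;
};
```

Calling `compute` twice on the same `FakeDriver` returns two snapshots with disjoint ids, and clearing one leaves the other untouched, which mirrors the statement that each Scene is a distinct instance living in the application that requested it.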

Layout

To work with Scene Understanding, it helps to understand how the runtime represents components logically and physically. The Scene represents data with a specific layout that was chosen to be simple while maintaining an underlying structure that is flexible enough to meet future requirements without major revisions. The Scene does this by storing all Components (the building blocks for all Scene Objects) in a flat list, and by defining hierarchy and composition through references, where specific components reference others.
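As a rough illustration of a flat list with references, the C++ sketch below stores every component in one vector and expresses hierarchy only through ids. All names here (`FlatScene`, `Component`, `ComponentKind`, `children_of`, `make_example_scene`) are assumptions made for this example; they do not reflect the SDK's actual types or its internal storage.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <unordered_map>
#include <utility>
#include <vector>

// Hypothetical model, not the SDK's types.
using ComponentId = int;

enum class ComponentKind { Object, Quad, Mesh };

struct Component {
    ComponentId id;
    ComponentKind kind;
    std::string label;                  // illustrative only, e.g. "Wall"
    std::vector<ComponentId> children;  // references into the flat list
};

class FlatScene {
public:
    // Append a component to the flat list; ids must be unique.
    void add(Component c) {
        assert(index_.count(c.id) == 0);
        index_[c.id] = components_.size();
        components_.push_back(std::move(c));
    }

    // Resolve a reference, or return nullptr if the id is unknown.
    // The pointer is invalidated by a later call to add().
    const Component* find(ComponentId id) const {
        auto it = index_.find(id);
        return it == index_.end() ? nullptr : &components_[it->second];
    }

    // Rebuild the logical view of one component by following its references.
    std::vector<const Component*> children_of(ComponentId id) const {
        std::vector<const Component*> out;
        if (const Component* parent = find(id)) {
            for (ComponentId child : parent->children) {
                if (const Component* c = find(child)) out.push_back(c);
            }
        }
        return out;
    }

    std::size_t size() const { return components_.size(); }

private:
    std::vector<Component> components_;                   // physical layout
    std::unordered_map<ComponentId, std::size_t> index_;  // id -> position
};

// Two objects whose quads and meshes sit beside them in the same flat list.
FlatScene make_example_scene() {
    FlatScene scene;
    scene.add({1, ComponentKind::Object, "Wall", {2, 3}});
    scene.add({2, ComponentKind::Quad, "Wall quad", {}});
    scene.add({3, ComponentKind::Mesh, "Wall mesh", {}});
    scene.add({4, ComponentKind::Object, "Floor", {5}});
    scene.add({5, ComponentKind::Quad, "Floor quad", {}});
    return scene;
}
```

In this model, `make_example_scene().children_of(1)` walks the wall's references and returns its quad and mesh, even though all five components are stored side by side. Keeping storage flat means a new component kind only needs a new enum value and new references, not a new container type.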

Below we present an example of a structure in both its flat and logical form.

| Logical Layout | Physical Layout | ||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|